Proxmox OpenVZ Container and KVM Startup and Shutdown Order

Category : How-to

From time to time you will need to shutdown and startup your Proxmox hardware node. There are many reasons for this such as adding new hardware or to apply new kernel updates.

From time to time you will need to shutdown and startup your Proxmox hardware node. There are many reasons for this such as adding new hardware or to apply new kernel updates.

Before you shutdown the hardware node you must cleanly shutdown all OpenVZ containers and all KVMs. To avoid having to do this manually, Proxmox will issue all running containers and VMs the shutdown command as part of the hardware nodes shutdown procedure. Proxmox also gives us the option to specify which containers and VMs should be started when the hardware node is turned on. This means that the final part of the hardware nodes startup sequence will be to start your containers and VMs. Depending on your environment, this may need to be done in a specific order. For example you may wish your database VM or container to be started before your web application.

Enable automatic startup of containers and VMs

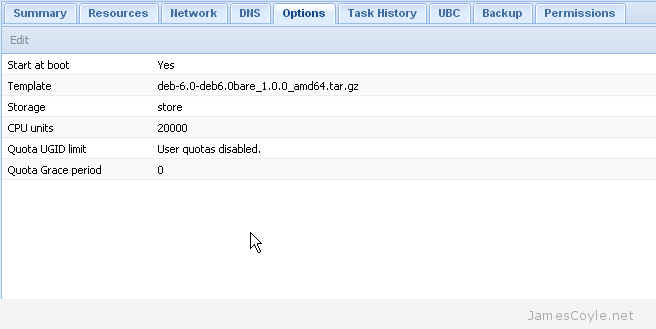

To enable the automatic startup of an OpenVZ container or VM you must specify the Start at boot attribute in the Proxmox web interface. Simply click on the Options tab of the container or VM and double click the Start at boot attribute.

Make sure the checkbox is ticked in the new dialogue box which pops up and click OK.

Your container or VM will now automatically be started when your hardware node next starts.

Startup Order for VMs

It is sometimes necessary to specify which containers and VMs startup first and how long the hardware node should wait until issuing the next startup command. This is easy for KVMs as this can be done through the Proxmox web interface.

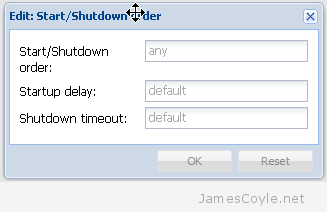

Simply click on the Options tab of the container or VM and double click the Start/Shutdown order attribute.

You can specify the following attributes for the KVM:

- Start/Shutdown order – this is the order the VMs will be started in. For example, setting this to 1 will mean that this VM will be instructed to start first. You can specify the same number for multiple VMs which means that all VMs of the same number will be instructed to start at the same time. The reverse order is used when the machines are automatically shutdown.

- Startup delay – this is the time in seconds which Proxmox will wait until moving on to the next priority. If you set a VM with a Start/Shutdown order of 1 with a Startup delay of 30, Proxmox will wait 30 seconds until instructing the VM with a Start/Shutdown order of 2 to start.

- Shutdown timeout – when a VM is asked to shutdown, an ACPI shutdown request is sent to the VM which should initiate the guests shutdown procedure. If ACPI requests are not supported by the guest, or an exception occurs during shutdown the process may not complete. In this case the Proxmox will wait for the Shutdown timeout threshold to pass before forcefully terminate the VM. If no value is specified then the defaults are used which are 60 seconds for Containers and 180 seconds for VMs.

Any VMs which have the Start at boot attribute enabled but no Start/Shutdown order attribute will be started after all VMs with a Start/Shutdown order attribute set have been processed.

Startup Order for OpenVZ Containers

Unfortunately Proxmox has not provided this functionality in the web based GUI for OpenVZ containers. To specify the order for a container we need to use the command line on the Proxmox hardware node.

Login to your Proxmox hardware node as the root user and issue the below command to set the machine startup order. You will need to change [PRIORITY] to the priority value to use and [VMID] to the ID of the container.

vzctl set [VMID] –bootorder [PRIORITY] -save

You can use vzlist if you do not know the [VMID] of the container.

vzlist CTID NPROC STATUS IP_ADDR HOSTNAME 100 32 running - test.jamescoyle.net

You must have already enabled Start at boot for the container to start up. Any containers which have the Start at boot attribute enabled but no Start/Shutdown order attribute will be started after all containers with a Start/Shutdown order attribute set have been processed.

You can spend hours tweaking the settings of a MySQL server instance to get the best possible performance for your hardware and environment. The hardest part is to ensure that the changes made are reflected with increased performance.

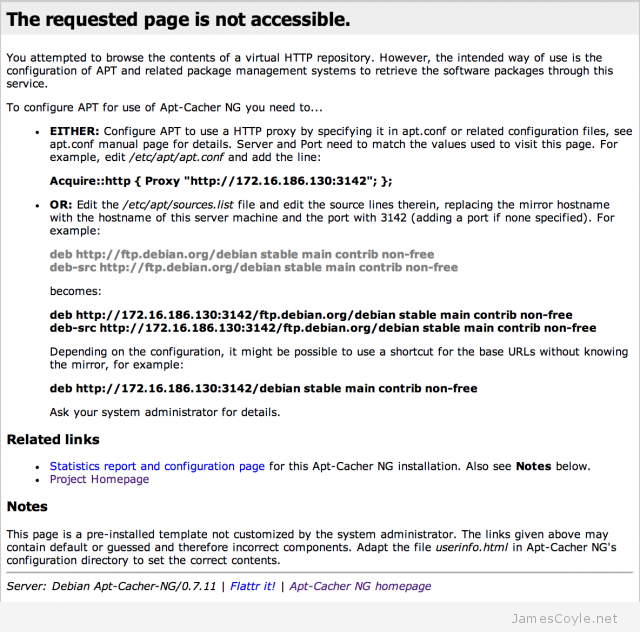

You can spend hours tweaking the settings of a MySQL server instance to get the best possible performance for your hardware and environment. The hardest part is to ensure that the changes made are reflected with increased performance. apt-get is the tool used in Debian and Ubuntu to manage packages installed on the system. Each time an update is available, or you install a new package the files will be downloaded from one of the central repository servers out on the internet and installed on your system.

apt-get is the tool used in Debian and Ubuntu to manage packages installed on the system. Each time an update is available, or you install a new package the files will be downloaded from one of the central repository servers out on the internet and installed on your system.

With Git you are able to define file exceptions to exclude certain files and folders from git repository commits. You can create files which contain a list of patterns which git will check against on each git add and ignore any matching files.

With Git you are able to define file exceptions to exclude certain files and folders from git repository commits. You can create files which contain a list of patterns which git will check against on each git add and ignore any matching files.