Move Proxmox Container to Different Storage (Updated for LXC)

Category : How-to

The Proxmox Web GUI does not let us migrate a container directly from one storage device to another. To move a container onto different storage, we have to back up the container and restore it to the same ID with a different storage device specified. This can be time-consuming when working with several containers.

This is an update to the OpenVZ script found here.

The script below moves an LXC container from one storage device to another. The container must be stopped during the process, which the script handles for you.

Save the script below into a file called migrate.

vi migrate

#!/bin/bash

#

# Filename : migrate

# Description : Migrate Proxmox LXC container from one storage to another

# Author : James Coyle

#

# Version:

# -Date -Author -Description

# 20-11-2013 James Coyle Initial

# 13-12-2017 James Coyle Changes for LXC

#

#

# Variables

TMP=/tmp #Location to use to create the backup for transferring to new storage. This needs to be big enough to store the backup archive for the container.

# Do not edit

usage() {

echo "Usage: $0"

echo " [-c Required: Container ID to migrate <int>] "

echo " [-s Required: Target storage ID <string>]"

echo " [-d Optional: Delete the backup file after CT restoration <boolean>]"

echo ""

echo "Example: $0 -c 100 -s nasarray"

echo ""

exit 1;

}

while getopts "c:s:d" o; do

case "${o}" in

c)

CT=${OPTARG}

;;

s)

TARGET_STORAGE=${OPTARG}

;;

d)

DELETE=true

;;

*)

usage

;;

esac

done

shift $((OPTIND-1))

# Check mandatory fields

if [ -z "${CT}" ] || [ -z "${TARGET_STORAGE}" ]; then

usage

fi

RUNNING=false

set -e

set -o pipefail

echo "Moving $CT to $TARGET_STORAGE..."

if pct status "$CT" | grep -q "running"

then

RUNNING=true

fi

if $RUNNING

then

pct stop $CT

fi

vzdump --dumpdir $TMP $CT

ARCHIVE=$(ls -t $TMP/vzdump-lxc-$CT-*.tar | head -n 1)

pct restore $CT $ARCHIVE -force -storage $TARGET_STORAGE

if $RUNNING

then

pct start $CT

fi

if ${DELETE:-false}

then

LOG=$(ls -t $TMP/vzdump-lxc-$CT-*.log | head -n 1)

echo "Deleting $LOG and $ARCHIVE"

rm -f "$ARCHIVE" "$LOG"

fi

Set execution permissions on the script:

chmod +x migrate

The script has several parameters which are detailed below:

- -d is specified if you would like the script to delete the temporary backup after the process has completed. Leave this out if you would like the backup tar file to be kept, just in case anything goes wrong.

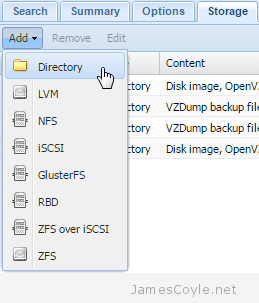

- -s is required to specify the name of the target storage. You can find this from the Proxmox Web GUI.

- -c is required for the container ID to migrate.
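
The storage name can also be looked up from the shell rather than the Web GUI. The following is a minimal sketch, assuming that `pvesm status` prints a header row followed by one row per storage with the storage ID in the first column. The helper name `storage_ids` is hypothetical and is not part of the migrate script.

```shell
#!/bin/bash
# storage_ids (hypothetical helper): reads `pvesm status` style output on
# stdin and prints the first column of every row after the header row.
storage_ids() {
    awk 'NR > 1 {print $1}'
}

# Example, to be run on a Proxmox host:
# pvesm status | storage_ids
```

Any ID printed this way should be usable as the value for -s, provided that storage is configured to hold container content.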

In addition, the script contains the variable TMP. This will be the location of the backup tar created as part of the migration process and must contain enough space to store the content of the container being migrated. You can change this to suit your environment.
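
Because the backup archive has to fit in TMP, it can help to check the free space there before starting. The following is a rough sketch, not part of the original script: `has_free_space` is a hypothetical helper, and the required size is something you would estimate yourself from the size of the container.

```shell
#!/bin/bash
# has_free_space (hypothetical helper): succeeds if the filesystem holding
# the given directory has at least the given number of KiB available.
has_free_space() {
    local dir=$1 required_kb=$2 available_kb
    # df -P prints POSIX-format output on one line per filesystem;
    # with -k, column 4 of the second line is the available space in KiB.
    available_kb=$(df -Pk "$dir" | awk 'NR == 2 {print $4}')
    [ "$available_kb" -ge "$required_kb" ]
}

# Example: require roughly 10 GiB free in /tmp before migrating.
# has_free_space /tmp $((10 * 1024 * 1024)) || echo "Not enough space in /tmp" >&2
```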

Example command:

./migrate -d -s newstorage -c 101
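
When several containers need to move to the same storage, the script can be called in a loop. This is only a sketch: `migrate_all` and `MIGRATE_CMD` are hypothetical names introduced here, and the loop assumes the migrate script is in the current directory.

```shell
#!/bin/bash
# MIGRATE_CMD and migrate_all are hypothetical names used for illustration.
# MIGRATE_CMD defaults to the migrate script in the current directory.
MIGRATE_CMD=${MIGRATE_CMD:-./migrate}

# Usage: migrate_all <target storage> <container ID>...
migrate_all() {
    local storage=$1 ct
    shift
    for ct in "$@"; do
        # Stop at the first failure so a broken migration is noticed early.
        "$MIGRATE_CMD" -d -s "$storage" -c "$ct" || return 1
    done
}

# Example: migrate_all newstorage 101 102 103
```

Containers are migrated one at a time, so TMP only needs room for the largest single backup when -d is used.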
