Install AWS CodeDeploy Agent on Linux

The AWS CodeDeploy agent runs deployment jobs on EC2 instances. Before a CodeDeploy job can run, you’ll need to make sure the agent is installed and running, and that it has the correct IAM permissions to execute.

For more information on AWS CodeDeploy, please see: https://aws.amazon.com/codedeploy/

Installation is straightforward on Linux and will have your agent up and running in no time.

The example below is based on Ubuntu, but the same steps apply on other distributions, with the exception of the package manager used to install Ruby.

As the root user, run the below commands. root is required because the deployment could be performing actions that require elevated privileges. Ruby is an installation dependency for AWS CodeDeploy and must be available before installing the agent itself.

apt update

apt -y install ruby

Once Ruby is installed, we can download and install the CodeDeploy agent. The agent below is downloaded from the eu-central-1 region, but you can replace the region with your local region if required. Other than saving bandwidth charges for the download (which will be tiny), there is no real reason to do so.
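If you do want to swap the region, the only part of the download URL that changes is the region name inside the bucket host. A minimal sketch, assuming the bucket naming pattern from the eu-central-1 URL in this post also holds for your region; the helper name `codedeploy_installer_url` is made up for illustration:

```shell
#!/bin/sh
# Hypothetical helper: prints the installer URL for a region.
# Assumption: other regions follow the same bucket naming pattern as the
# eu-central-1 URL used in this post.
codedeploy_installer_url() {
    region="${1:-eu-central-1}"   # default to the region used in this post
    printf 'https://aws-codedeploy-%s.s3.amazonaws.com/latest/install\n' "$region"
}
```

For example, `wget "$(codedeploy_installer_url eu-west-1)"` would fetch the installer from eu-west-1 instead.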

cd /tmp

wget https://aws-codedeploy-eu-central-1.s3.amazonaws.com/latest/install

chmod +x ./install

./install auto

The final step is to start the agent and check that it’s running. A systemd entry will be added and needs to be called to start the agent.

service codedeploy-agent start

Finally, check that the agent is running by checking the log. You should see output similar to the below.

tail -f /var/log/aws/codedeploy-agent/codedeploy-agent.log

2019-11-24 07:30:54 INFO [codedeploy-agent(31022)]: master 31017: Spawned child 1/1

2019-11-24 07:30:54 INFO [codedeploy-agent(31022)]: On Premises config file does not exist or not readable

2019-11-24 07:30:54 INFO [codedeploy-agent(31022)]: InstanceAgent::Plugins::CodeDeployPlugin::CommandExecutor: Archives to retain is: 5}

2019-11-24 07:30:54 INFO [codedeploy-agent(31022)]: Version file found in /opt/codedeploy-agent/.version with agent version OFFICIAL_1.0-1.1597_deb.

2019-11-24 07:30:54 INFO [codedeploy-agent(31017)]: Started master 31017 with 1 children

2019-11-24 07:31:54 INFO [codedeploy-agent(31022)]: [Aws::CodeDeployCommand::Client 200 61.547075 0 retries] poll_host_command(host_identifier:"xxxx")

See here for the installation steps combined into a single script.
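As a rough sketch of how the steps above could be combined, the block below wraps them in two bash functions. It assumes Ubuntu, the same bucket naming pattern as the eu-central-1 URL, and the log path shown above. The function names `install_codedeploy_agent` and `agent_is_polling` are made up for illustration, and the grep pattern is simply taken from the sample log line, so treat it as a starting point and not as a definitive health check.

```shell
#!/bin/bash
# Sketch only: combines the manual steps from this post.
# Function names are hypothetical, not part of the CodeDeploy tooling.

LOG_FILE="/var/log/aws/codedeploy-agent/codedeploy-agent.log"

# Succeeds if the given log (default: the agent log above) contains a
# successful poll line like the last entry in the sample output.
agent_is_polling() {
    grep -q 'Aws::CodeDeployCommand::Client 200 .*poll_host_command' "${1:-$LOG_FILE}"
}

# Installs Ruby, downloads the installer for the given region and starts the agent.
install_codedeploy_agent() {
    local region="${1:-eu-central-1}"

    # The package install, the installer and the service command all need root.
    if [ "$(id -u)" -ne 0 ]; then
        echo "This must be run as root" >&2
        return 1
    fi

    apt update || return 1
    apt -y install ruby || return 1

    cd /tmp || return 1
    # -O overwrites any installer left over from an earlier run.
    wget -O install "https://aws-codedeploy-${region}.s3.amazonaws.com/latest/install" || return 1
    chmod +x ./install || return 1
    ./install auto || return 1

    service codedeploy-agent start || return 1
}

# Example invocation (as root):
#   install_codedeploy_agent eu-central-1
#   agent_is_polling && echo "agent is polling CodeDeploy"
```

In the sample log the first successful poll appears about a minute after the agent starts, so `agent_is_polling` may fail if it is called immediately after installation.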

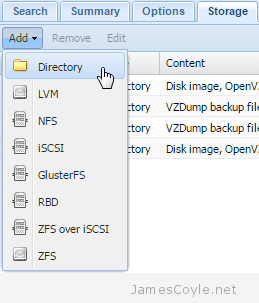
