Set up GlusterFS with a replicated volume over 2 nodes

Category : How-to

This post shows you how to install GlusterFS on Ubuntu/Debian. The steps are similar on Red Hat based Linux operating systems, with minor changes to the commands.

Gluster File System is a distributed file system that lets you create a single volume of storage spanning multiple disks, multiple machines and even multiple data centres.

Before we get started, install the required packages using apt-get. On Red Hat/CentOS based operating systems you will need to use yum, or download the package directly from http://download.gluster.org/pub/gluster/glusterfs/3.4/LATEST/

apt-get install glusterfs-server

Perform this on both of your servers. If you have more than two servers, perform this command on all of the servers required for the volume.

You will now need each of these servers to know about the others. Run gluster peer probe followed by the hostname or IP address of each of the other servers in your GlusterFS cluster.

gluster peer probe gfs2.jamescoyle.net

Each command should return Probe successful, which means the node is now known to this machine. You only need to do this on one node of your cluster.
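If you have several peers to add, the probe can be looped. The snippet below is only a sketch: probe_commands is a hypothetical helper name, and gfs3.jamescoyle.net is a made-up third node used for illustration. It prints the commands rather than running them, so you can review the list first.

```shell
# Print one "gluster peer probe" command per host passed as an argument.
# Nothing is executed here; pipe the output to sh once the list looks right.
probe_commands() {
    for host in "$@"; do
        printf 'gluster peer probe %s\n' "$host"
    done
}

# Example: gfs3.jamescoyle.net is a hypothetical extra node.
probe_commands gfs2.jamescoyle.net gfs3.jamescoyle.net
```

When you are happy with the output, run the same line with | sh appended on the node you are probing from.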

Run gluster peer status to check each node in your cluster is aware of the other nodes:

gluster peer status

The result should look like:

Number of Peers: 1
Hostname: gfs2.jamescoyle.net
Uuid: a0977ca2-6e47-4c1a-822b-99df896080ee
State: Peer in Cluster (Connected)
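If you want to check this from a script, one possible approach is to count the peers reported as connected. This is a rough sketch that assumes the State line is worded exactly as in the output above; count_connected_peers is a hypothetical helper name, and the sample text is simply the example output from this post.

```shell
# Count peers reported as connected in "gluster peer status" output on stdin.
# -F treats the pattern as a fixed string, -c prints the number of matching lines.
count_connected_peers() {
    grep -c -F 'State: Peer in Cluster (Connected)'
}

# Sample input copied from the example output in this post.
sample='Number of Peers: 1
Hostname: gfs2.jamescoyle.net
Uuid: a0977ca2-6e47-4c1a-822b-99df896080ee
State: Peer in Cluster (Connected)'

printf '%s\n' "$sample" | count_connected_peers
```

On a real node you would feed it live output instead: gluster peer status | count_connected_peers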

Now we need to create the volume where the data will reside. The volume will be called datastore. First of all, we need to identify where on each host the storage is. For this example it is /mnt/gfs_block on both nodes, but it could be any mount point of storage that you have. If the folder does not exist it will be silently created, so be sure to use the correct path on all nodes.
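Because a missing folder is created without warning, it may be worth checking the path on each node before you create the volume. A minimal sketch, where check_brick_dir is a hypothetical helper name and the check only confirms that the path is an existing directory, not that it is the mount you intended:

```shell
# Succeed only if the given path exists and is a directory.
check_brick_dir() {
    if [ -d "$1" ]; then
        echo "ok: $1 exists"
    else
        echo "missing: $1" >&2
        return 1
    fi
}

# Usage on each node (not run here):
#   check_brick_dir /mnt/gfs_block
```

With the path confirmed on every node, the volume can be created.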

gluster volume create datastore replica 2 transport tcp gfs1.jamescoyle.net:/mnt/gfs_block gfs2.jamescoyle.net:/mnt/gfs_block
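If you have more than two servers, the same command grows by one brick per host. The sketch below builds the command text for any number of hosts. It assumes you want every host to hold a full copy, so the replica count is set to the number of hosts, and that the brick path is the same everywhere. create_command is a hypothetical helper name, and it only prints the command.

```shell
# Print a "gluster volume create" command with one brick per host.
# Usage: create_command VOLUME BRICK_PATH HOST [HOST...]
create_command() {
    volume=$1
    brick_path=$2
    shift 2
    bricks=""
    for host in "$@"; do
        bricks="$bricks $host:$brick_path"
    done
    # After the shift, $# is the number of hosts; it is used as the replica count.
    printf 'gluster volume create %s replica %s transport tcp%s\n' "$volume" "$#" "$bricks"
}

create_command datastore /mnt/gfs_block gfs1.jamescoyle.net gfs2.jamescoyle.net
```

For the two nodes in this post, the printed line matches the command shown above.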

If this has been successful, you should see:

Creation of volume datastore has been successful. Please start the volume to access data.

As the message indicates, we now need to start the volume:

gluster volume start datastore

And wait for the message that it has started.

Starting volume datastore has been successful

Running either of the commands below should indicate that GlusterFS is up and running. The ps command should show the gluster processes running with both servers in their arguments. netstat should show a connection between both nodes.

ps aux | grep gluster
netstat -tap | grep glusterfsd

As a final test, to make sure the volume is available, run gluster volume info. An example output is below:

gluster volume info

Volume Name: datastore
Type: Replicate
Status: Started
Number of Bricks: 2
Transport-type: tcp
Bricks:
Brick1: gfs1.jamescoyle.net:/mnt/gfs_block
Brick2: gfs2.jamescoyle.net:/mnt/gfs_block
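To use this check in a script, you could pull out just the Status field. This is a sketch that assumes the output has one field per line with a "Status:" prefix, as in the example in this post. volume_status is a hypothetical helper name, and the sample text is a shortened copy of that example.

```shell
# Read "gluster volume info" output on stdin and print the value of the Status field.
volume_status() {
    sed -n 's/^Status: *//p'
}

# Sample input based on the example output in this post.
sample_info='Volume Name: datastore
Type: Replicate
Status: Started
Number of Bricks: 2
Transport-type: tcp'

printf '%s\n' "$sample_info" | volume_status
```

On a real node, gluster volume info datastore | volume_status should print Started once the volume is running.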

That’s it! You now have a GlusterFS volume which will maintain replication across two nodes. To see how to use your volume, see our guide to mounting a volume.

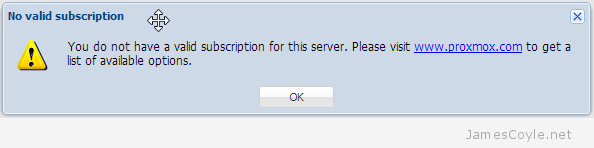

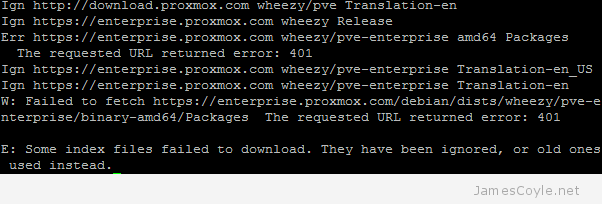

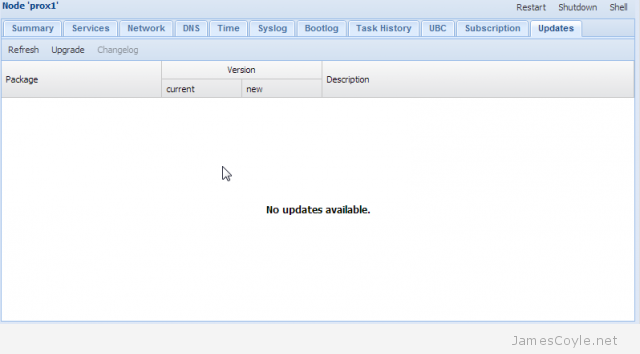

Proxmox version 3.1 made a huge change to the way updates are managed. Prior to version 3.1, updates for the PVE software were managed from two main repositories – test and production – both of which were free and available. Generally the production repositories were used to ensure a stable and secure Proxmox host. These two repositories have now changed to the following:

Proxmox version 3.1 made a huge change to the way updates are managed. Prior to version 3.1, updates for the PVE software were managed from two main repositories – test and production – both of which were free and available. Generally the production repositories were used to ensure a stable and secure Proxmox host. These two repositories have now changed to the following:

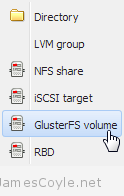

This is a new storage plugin which can be used to add usable storage to your Proxmox host. GlusterFS is an open source, distributed file system with potential to house a huge capacity of data.

This is a new storage plugin which can be used to add usable storage to your Proxmox host. GlusterFS is an open source, distributed file system with potential to house a huge capacity of data.

If you need a software firewall to shield containers on a Proxmox stack, you should always use a firewall on the host to decide what traffic is allowed for each container. This brings some obvious benefits such as it’s centrally managed – one configuration location for all containers on the node, and security as a compromised container cannot change firewall settings.

If you need a software firewall to shield containers on a Proxmox stack, you should always use a firewall on the host to decide what traffic is allowed for each container. This brings some obvious benefits such as it’s centrally managed – one configuration location for all containers on the node, and security as a compromised container cannot change firewall settings.