Bonnie++ is a disk and file system benchmarking tool for measuring I/O performance. With Bonnie++ you can quickly and easily produce a meaningful value to represent your current file system performance.

Before using Bonnie++, make sure it is installed on your system. On Ubuntu, use apt-get (as root or with sudo) to install the bonnie++ package.

apt-get install bonnie++

Run the bonnie++ command with the following options:

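- [TEST_LOCATION] – the directory in which bonnie++ will create its test files.

- [TEST_SIZE] – the size of the test file. This should be greater than double the RAM in your system, so that the results are not skewed by caching.

- [TEST_NAME] – a label which will be written out with the results.

- [TEST_USER] – the user who should perform the test. This is only required when running as root.

The remaining flags stay as they are: -n 0 skips the file creation tests, -f skips the per-character I/O tests, and -b disables write buffering by calling fsync() after every write.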

bonnie++ -d [TEST_LOCATION] -s [TEST_SIZE] -n 0 -m [TEST_NAME] -f -b -u [TEST_USER]
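Since [TEST_SIZE] depends on the amount of RAM, it can be worked out rather than guessed. The following is a minimal sketch, assuming a Linux system that exposes MemTotal in /proc/meminfo; `test_size_mb` is a hypothetical helper name, and the result is in megabytes, which is how bonnie++ reads a bare number given to -s.

```shell
#!/bin/sh
# Hypothetical helper: given total RAM in kB (the unit /proc/meminfo uses),
# print double that amount in MiB, rounded up.
test_size_mb() {
    ram_kb=$1
    echo $(( (ram_kb * 2 + 1023) / 1024 ))
}

# Assumption: Linux with /proc/meminfo; other systems need another source.
if [ -r /proc/meminfo ]; then
    ram_kb=$(awk '/^MemTotal:/ {print $2}' /proc/meminfo)
    echo "Suggested -s value: $(test_size_mb "$ram_kb")"
fi
```

The printed number is exactly double the RAM, so round it up a little further before passing it to -s.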

For example:

bonnie++ -d /tmp -s 4G -n 0 -m TEST -f -b -u james

Using uid:1000, gid:1000.

Writing intelligently...done

Rewriting...done

Reading intelligently...done

start 'em...done...done...done...done...done...

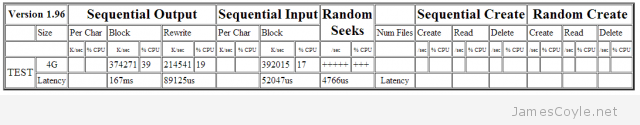

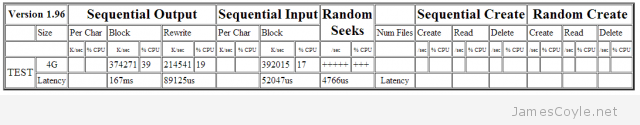

Version 1.96, Concurrency 1, Machine TEST, Size 4G

| Test | K/sec | %CP | Latency |
| --- | --- | --- | --- |
| Sequential Output, Per Chr | | | |
| Sequential Output, Block | 374271 | 39 | 167ms |
| Sequential Output, Rewrite | 214541 | 19 | 89125us |
| Sequential Input, Per Chr | | | |
| Sequential Input, Block | 392015 | 17 | 52047us |
| Random Seeks (/sec) | +++++ | +++ | 4766us |

1.96,1.96,TEST,1,1387339401,4G,,,,374271,39,214541,19,,,392015,17,+++++,+++,,,,,,,,,,,,,,,,,,,167ms,89125us,,52047us,4766us,,,,,,

The easiest way to understand the results of a bonnie++ test is to run the output through the bon_csv2html utility. This Perl script takes the bonnie++ results and generates an HTML page which you can then open with your web browser.

Copy the last line of the bonnie++ output into the echo command to replace [RESULTS] and alter the [OUTPUT] path to point to where you would like to save your results.

echo "[RESULTS]" | bon_csv2html > [OUTPUT]

Example command:

echo "1.96,1.96,TEST,1,1387339401,4G,,,,374271,39,214541,19,,,392015,17,+++++,+++,,,,,,,,,,,,,,,,,,,167ms,89125us,,52047us,4766us,,,,,," | bon_csv2html > /tmp/test.html
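Copying the line by hand can be avoided by piping the benchmark straight into bon_csv2html. This is a minimal sketch, assuming the CSV summary is the last non-empty line that bonnie++ writes to standard output; `last_csv_line` is a hypothetical helper name and not part of bonnie++.

```shell
#!/bin/sh
# Hypothetical helper: keep only the last non-empty line of stdin,
# which is assumed to be the bonnie++ CSV summary.
last_csv_line() {
    awk 'NF { line = $0 } END { print line }'
}

# Usage (not run here; the options and paths are those from the example):
#   bonnie++ -d /tmp -s 4G -n 0 -m TEST -f -b -u james \
#       | last_csv_line | bon_csv2html > /tmp/test.html
```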

Finally, open the output file with your web browser.
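If a quick summary in the terminal is enough, the CSV line can also be read with awk. The sketch below is hedged: the field positions (10, 12 and 16 for block write, rewrite and block read) are an assumption read off the 1.96 sample output above and may differ in other bonnie++ versions, and `summarise_csv` is a hypothetical name.

```shell
#!/bin/sh
# Hypothetical helper: print the three block throughput figures in MiB/s.
# Assumption: fields 10, 12 and 16 hold block write, rewrite and block read
# in K/sec, as they do in the 1.96 sample line from this post.
summarise_csv() {
    awk -F, '{
        printf "block write: %.1f MiB/s\n", $10 / 1024
        printf "rewrite: %.1f MiB/s\n", $12 / 1024
        printf "block read: %.1f MiB/s\n", $16 / 1024
    }'
}

# Example with the start of the sample line (later fields are not used).
echo '1.96,1.96,TEST,1,1387339401,4G,,,,374271,39,214541,19,,,392015,17,+++++,+++' | summarise_csv
```

For the sample run this reports roughly 365.5, 209.5 and 382.8 MiB/s.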

See my other post on using bonnie++ to benchmark your file system.

GlusterFS can be used to synchronise a directory to a remote server on a local network for data redundancy or load balancing to provide a highly scalable and available file system.

There is a handy command called showmount which displays all the active folder exports on an NFS server. This is useful when connecting to a new NFS export from a remote machine, as you can check that the export is available on the NFS server.

Usually a web server can be reached by multiple addresses, such as the DNS entry of the server (e.g. www.jamescoyle.net) and the IP address of the server (e.g. 10.10.10.1). This is a problem when it comes to presenting a single entry point to your website.
